Spot AI

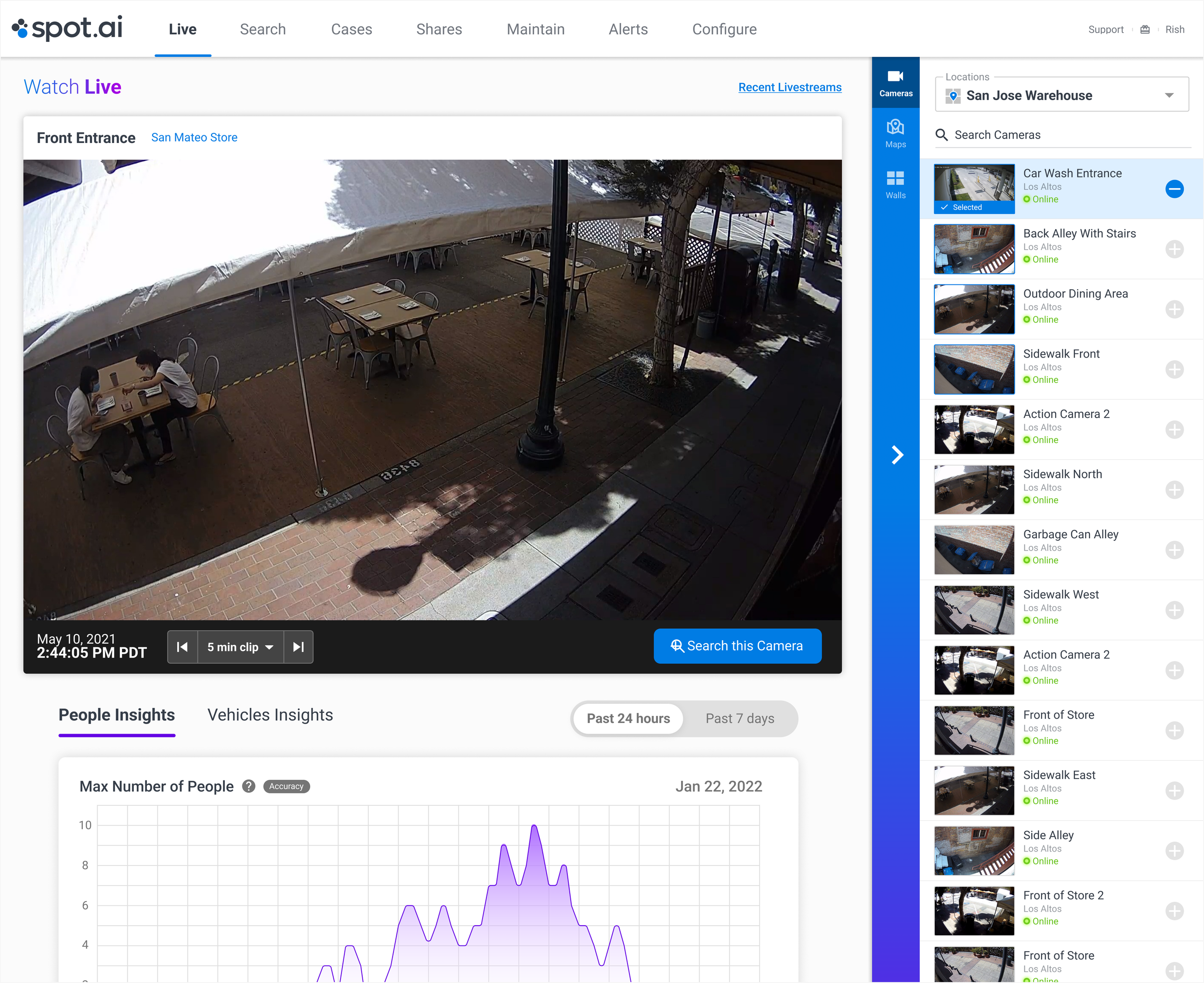

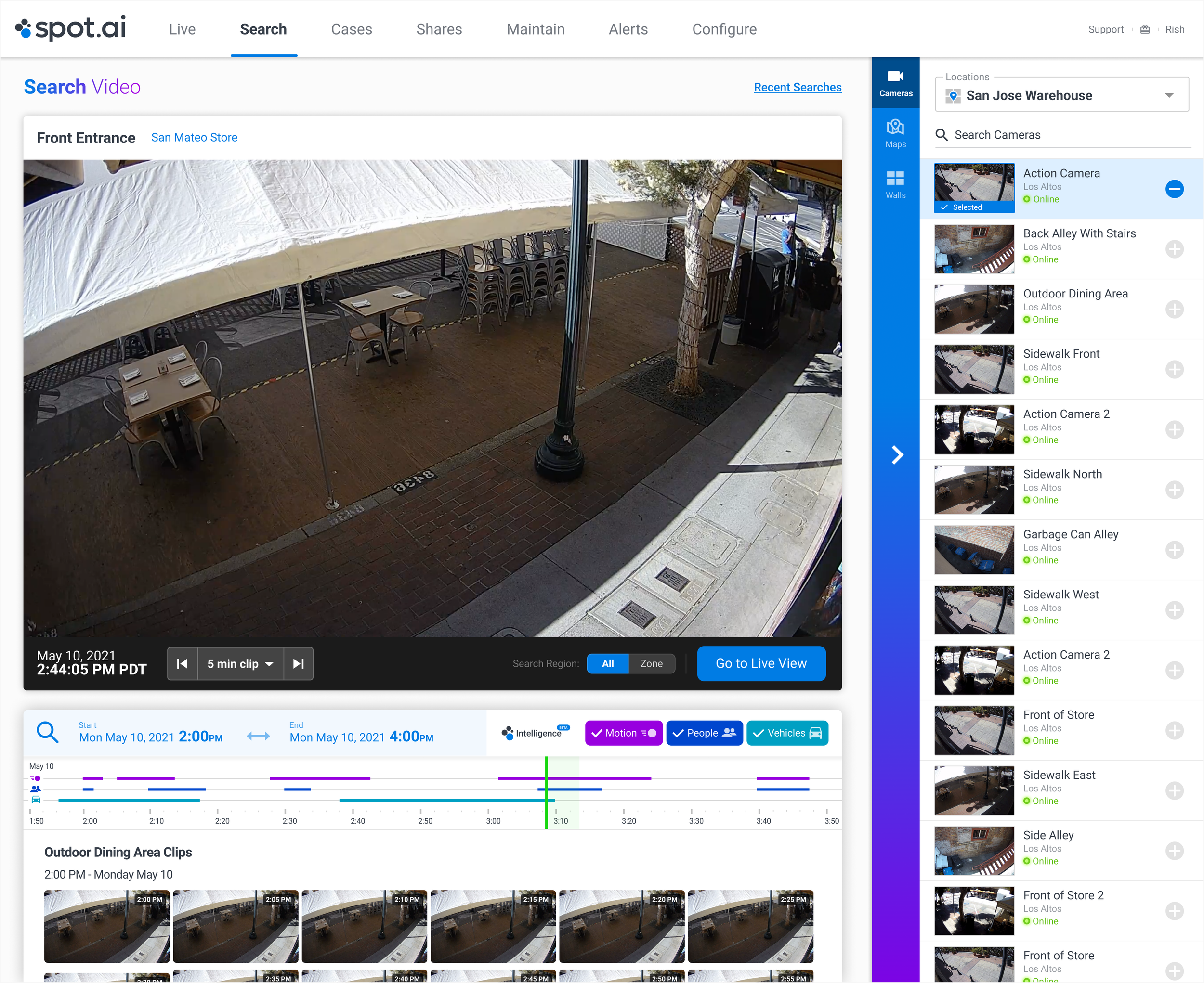

Live & Search Redesign

For Spot AI, I redesigned a video surveillance dashboard to unify live monitoring and historical search into a single, seamless experience. By introducing a timeline-based player and implementing a new design system, I shifted the product from two disconnected tools to a time-centered workflow that made investigations more intuitive and reduced context switching. The feature rolled out smoothly and received consistently positive user feedback, reinforcing that the new approach better supported real-world monitoring needs.

Context

I worked on a video surveillance dashboard used for live site monitoring and incident investigation. The platform supported real-time camera feeds along with tools to review past footage, but these experiences had evolved separately over time. As the product grew in complexity, the core monitoring workflow became fragmented and difficult to use, especially during time-sensitive investigations.

Problem

Live viewing and historical search existed as two different tools with different interfaces and mental models. Users had to leave the live feed to search for past activity, which caused them to lose context and slowed down investigations. The product felt like two systems stitched together, increasing cognitive load and making it harder for users to understand what had happened and when. The separation also limited how meaningfully advanced features such as activity detection could be integrated.

Approach

I reframed the experience around time as the primary navigation model instead of treating “live” and “search” as separate modes. The core design move was introducing a unified timeline directly within the video player, allowing users to move fluidly between present and past activity in one continuous experience. In parallel, I helped implement a new design system to bring consistency and scalability across the product. My focus was on reducing context switching, simplifying complex workflows, and designing a foundation that could support future AI-driven features.

Output

The result was a unified video player that combined live monitoring, playback, and search. I designed an activity timeline that visualized motion, people, vehicles, and other signals directly on the playback bar. Filters were integrated into the viewing experience so users could refine what they were seeing without leaving their context. The redesign also aligned with a new design system, improving consistency across interactions and components.

Outcome

The new experience reduced cognitive load by eliminating the need to switch between tools and made investigations more intuitive by organizing video around time and activity. Users could move seamlessly between live monitoring and historical review, creating a more natural and efficient workflow. The redesign also laid the groundwork for future intelligent features and made the product feel like a cohesive monitoring system rather than a collection of separate tools. The rollout was smooth, and users gave consistently positive feedback, reinforcing that the new approach better matched how they needed to work.